Imagine you're all alone in a low-lit parking lot when a big, white robot rolls up to you and offers its protection.

Congratulations: You just met the Knightscope K5, the latest in pre-crime technology!

Weirder things have happened, right? I mean, not many weirder things, but definitely some weirder things. GIF via Knightscope/YouTube.

It might look like the lovechild of R2-D2 and a Dalek, but the K5 is actually the world's first "autonomous data machine." (At least according to the press materials.)

What this actually means is that it roves around parking lots in Silicon Valley using facial recognition software to identify potential criminals, broadcasting massive amounts of information back to the company's private data center, and generally policing through (admittedly adorable) intimidation.

And what's more, this shiny robot cop can be rented out for as little as $6.25 an hour.

All in a day's work. GIF from "Robocop."

Are you feeling like you're living in the future yet? 'Cause the K5 ain't the only tech that allegedly stops crime before it happens.

Back in 2008, the Department of Homeland Security created the Future Attribute Screening Technology, or FAST. Originally known as Project Hostile Intent (can't imagine why they changed it?), this data-crunching program uses physiological and behavioral patterns to identify individuals with the potential to commit violent crimes.

And in September 2015, Hitachi released its fancy new Predictive Crime Analytics, which uses thousands of different factors, from weather patterns to word usage in social media posts, to identify when and where the next crime could happen.

"We're trying to provide tools for public safety so that [law enforcement is] armed with more information on who's more likely to commit a crime," explained Darrin Lipscomb, one of the creators of this crime-monitoring technology, in a Fast Company article.

He also said, "A human just can't handle when you get to the tens or hundreds of variables that could impact crime."

Sounds an awful lot like a certain Spielberg action movie starring a pre-couch-jumping Tom Cruise.

Speaking of curious behavioral patterns... GIF from "Minority Report."

So what are we waiting for? Let's use all this awesome new technology to put a stop to crime before it starts!

Except ... it's not "crime" if it hasn't happened, is it? You can't arrest someone for trying every car door in the parking lot, even if you do feel pretty confident that they're looking for an open one so they can search inside for things to steal.

And even then: How can you tell the difference between a thief, a homeless person looking for a place to sleep, and someone who just got confused about which car was theirs?

GIF from "Robocop."

Besides: What if the algorithms used in this pre-crime technology are just as biased as human behavior?

Numbers don't lie. But numbers also exist within a context, which means the bigger picture might not be as black and white as you'd think.

Consider the stop-and-frisk policies that currently exist in cities like New York or the TSA screening process at the airport. Those in favor might argue that if black and Muslim Americans are more likely to commit violent crimes, then it makes sense to treat them with extra caution. Those opposed would call that racial profiling.

As for the supposedly objective predictive robot cops? Based on the data available to them, they'd probably rule in favor of racial profiling.

Author/blogger Cory Doctorow explains this problem pretty succinctly:

"The data used to train the algorithm comes from the outcomes of the biased police activity. If the police are stop-and-frisking brown people, then all the weapons and drugs they find will come from brown people. Feed that to an algorithm and ask it where the police should concentrate their energies, and it will dispatch those cops to the same neighborhoods where they've always focused their energy, but this time with a computer-generated racist facewash that lets them argue that they're free from bias."
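To see that loop in miniature, here's a toy sketch in Python. Everything in it is an assumption made up for illustration: the function name `simulate_patrol_feedback`, the two neighborhoods, the 5% hit rate, and the 80/20 starting split. It isn't how any real predictive-policing product works. It just shows what happens when both neighborhoods have exactly the same underlying rate of contraband, but next round's stops are handed out in proportion to last round's finds.

```python
def simulate_patrol_feedback(rounds=5, hit_rate=0.05, stops=(80.0, 20.0)):
    """Toy model of a patrol-allocation feedback loop (illustrative only).

    Two neighborhoods share the SAME hit rate (fraction of stops that
    turn up contraband). Police start out stopping people unevenly.
    Each round, the "algorithm" reallocates the same total number of
    stops in proportion to where contraband was found last round.
    """
    total_stops = sum(stops)
    history = [tuple(stops)]
    for _ in range(rounds):
        # Finds depend only on how many stops were made, not on any
        # real difference between the neighborhoods.
        finds = [s * hit_rate for s in stops]
        total_finds = sum(finds)
        # Send the next round of stops to wherever the finds came from.
        stops = tuple(total_stops * f / total_finds for f in finds)
        history.append(stops)
    return history


if __name__ == "__main__":
    for round_number, (a, b) in enumerate(simulate_patrol_feedback()):
        print(f"round {round_number}: neighborhood A {a:.0f} stops, "
              f"neighborhood B {b:.0f} stops")
```

In this sketch the split never moves off roughly 80/20 (give or take floating-point rounding), no matter how many rounds you run. The data "confirms" the original allocation every time, because the only thing it measures is where the police already were.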

See how quickly this spirals downward? GIF from "Robocop."

Pre-crime measures might make us feel safer. But they could lead to some even scarier scenarios.

By trying to stop crimes before they happen, we can actually end up causing more crime. Just look at all the wonderful work that law enforcement agencies have already done by inadvertently creating terrorists and freely distributing child pornography.

The threat of surveillance from robots and data analysts might stop a handful of crimes, but it also opens up a bigger can of worms about what, exactly, a crime entails.

Because if you think you have "nothing to hide," well, tell that to the disproportionate number of black people imprisoned for marijuana or any of the men who've been arrested in the last few years for consensual sex with another man. (And remember that not too long ago, interracial sex was illegal, too.)

Do you really want these guys showing up at your house when you're trying to get it on? GIF from "Doctor Who."

Hell, if you've ever faked a sick day or purchased a lobster of a certain size, you've committed a felony.

The truth is, we've all committed crimes. And we've probably done it more often than we realize.

Don't get me wrong. It's certainly exciting to see people use these innovative new technologies to make the world safer. But there are other ways to stop crime before it starts without infringing on our civil rights.

For starters, we can fix the broken laws still on the books and create communities that care instead of cultivating fear. There'd be a lot less crime if we just looked after each other.

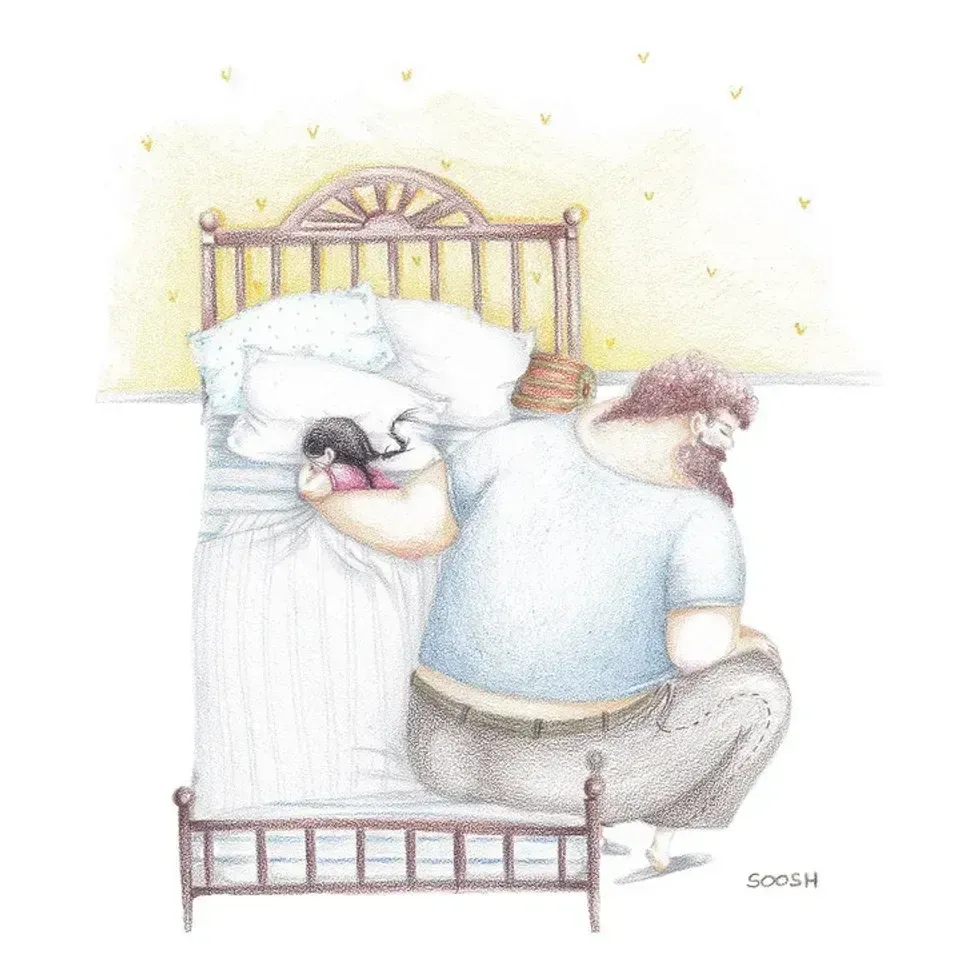

A father does his daughter's hair

A father does his daughter's hair A father plays chess with his daughter

A father plays chess with his daughter A dad hula hoops with his daughterAll illustrations are provided by Soosh and used with permission.

A dad hula hoops with his daughterAll illustrations are provided by Soosh and used with permission. A dad talks to his daughter while working at his deskAll illustrations are provided by Soosh and used with permission.

A dad talks to his daughter while working at his deskAll illustrations are provided by Soosh and used with permission. A dad performs a puppet show for his daughterAll illustrations are provided by Soosh and used with permission.

A dad performs a puppet show for his daughterAll illustrations are provided by Soosh and used with permission. A dad walks with his daughter on his backAll illustrations are provided by Soosh and used with permission.

A dad walks with his daughter on his backAll illustrations are provided by Soosh and used with permission. a dad carries a suitcase that his daughter holds onto

a dad carries a suitcase that his daughter holds onto A dad holds his sleeping daughterAll illustrations are provided by Soosh and used with permission.

A dad holds his sleeping daughterAll illustrations are provided by Soosh and used with permission. A superhero dad looks over his daughterAll illustrations are provided by Soosh and used with permission.

A superhero dad looks over his daughterAll illustrations are provided by Soosh and used with permission. A dad takes the small corner of the bed with his dauthterAll illustrations are provided by Soosh and used with permission.

A dad takes the small corner of the bed with his dauthterAll illustrations are provided by Soosh and used with permission. If you think socks are socks, think again.

If you think socks are socks, think again. You spend a third of your life on a mattress, so you want it to be a good one.

You spend a third of your life on a mattress, so you want it to be a good one. A good quality bra that fits is priceless.

A good quality bra that fits is priceless. Professional movers make moving so much less stressful, physically and mentally.

Professional movers make moving so much less stressful, physically and mentally. Cleaners save time, stress, and sometimes even relationships.

Cleaners save time, stress, and sometimes even relationships. Vet bills can be painful, but are 100% worth it.

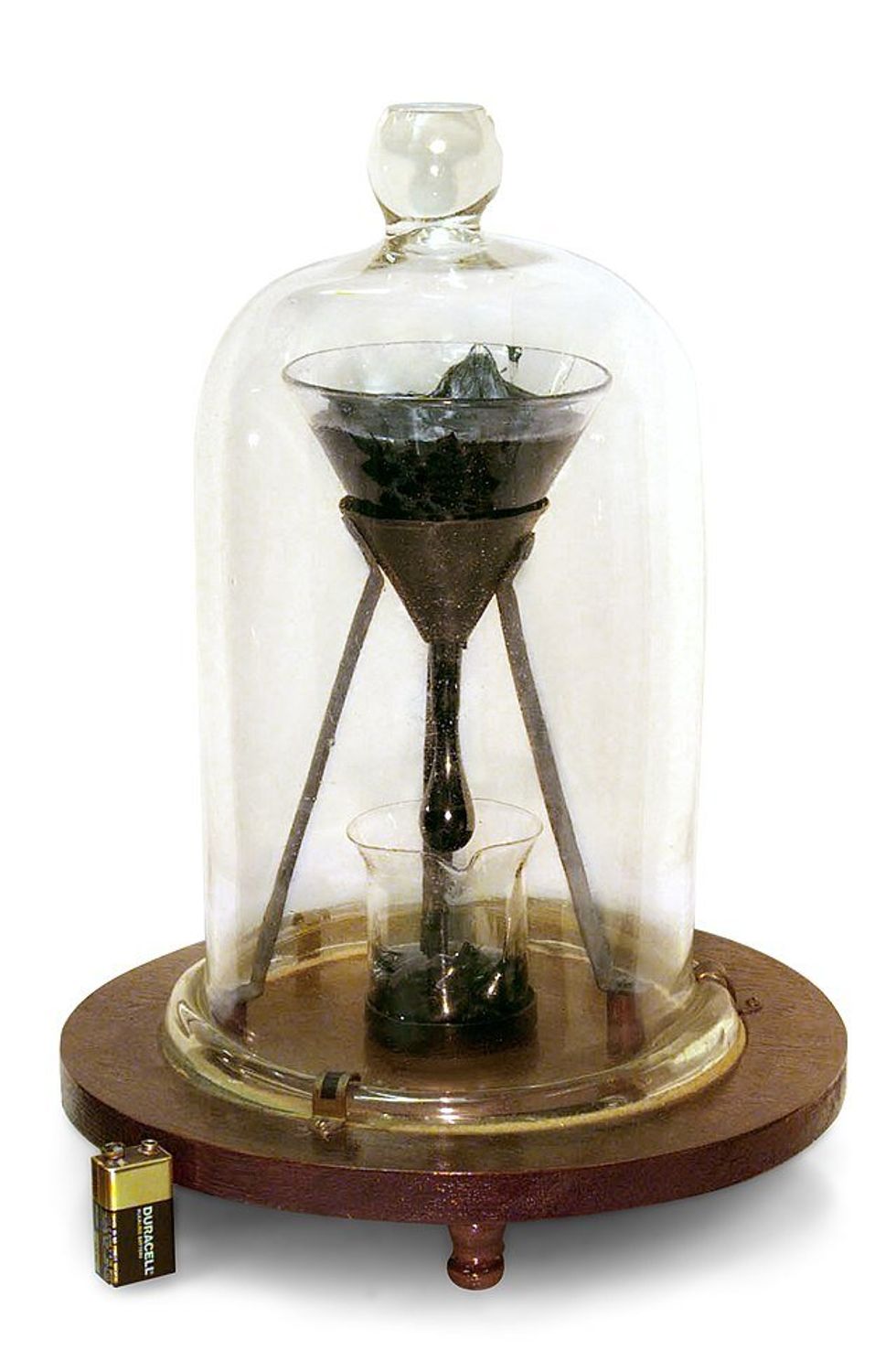

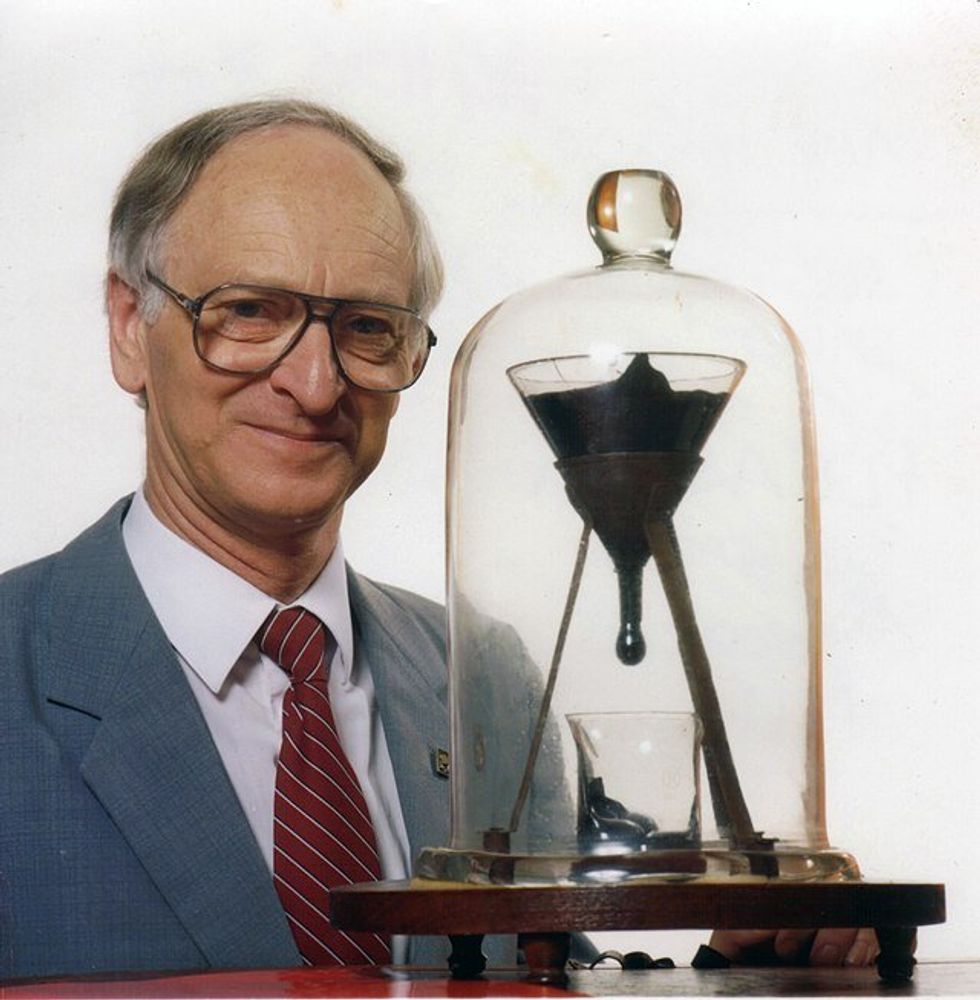

Vet bills can be painful, but are 100% worth it. Pitch moves so slowly it can't be seen to be moving with the naked eye until it prepares to drop. Battery for size reference.

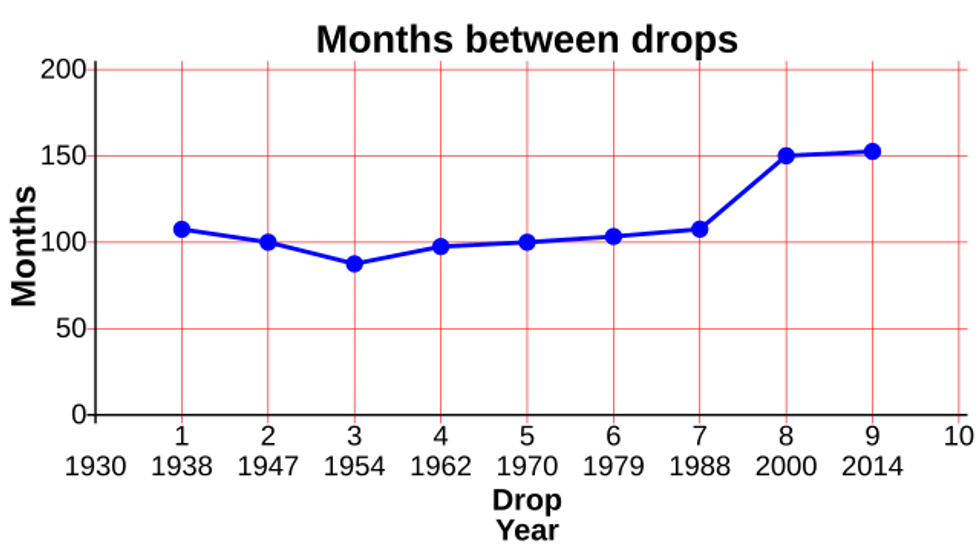

Pitch moves so slowly it can't be seen to be moving with the naked eye until it prepares to drop. Battery for size reference. The first seven drops fell around 8 years apart. Then the building got air conditioning and the intervals changed to around 13 years.

The first seven drops fell around 8 years apart. Then the building got air conditioning and the intervals changed to around 13 years. John Mainstone, the second custodian of the Pitch Drop Experiment, with the funnel in 1990.

John Mainstone, the second custodian of the Pitch Drop Experiment, with the funnel in 1990. Happy Music Video GIF by Chrissy Metz

Happy Music Video GIF by Chrissy Metz Many pets on the street belong to unhoused people.

Photo by

Many pets on the street belong to unhoused people.

Photo by