NVIDIA's CEO realized the smartest people aren't 'technical.' They have a totally different skill.

"People who are able to see around corners are truly, truly smart."

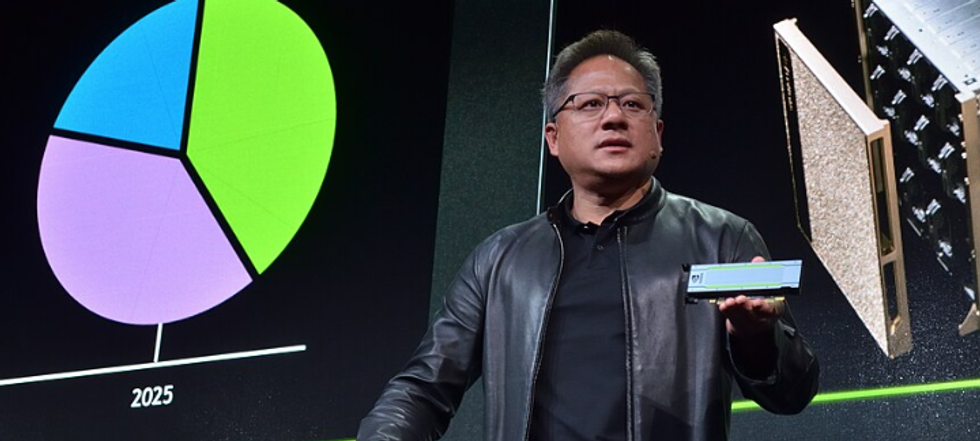

NVIDIA CEO Jensen Huang speaking in 2016.

Artificial intelligence promises to completely upend just about every facet of modern life, from how we work to education, medical care, and the design and manufacture of everyday goods. On a deeper level, it will also change how we see ourselves as humans, placing greater value on the uniquely human skills that no computer can replicate, no matter how powerful the server.

One person who knows a great deal about that is Jensen Huang, the president and CEO of NVIDIA, a company that designs chips for accelerated computing and AI data centers. Fortune has named Huang one of the world's best CEOs for his leadership and innovation.

Recently, he appeared on the A Bit Personal podcast with Jodi Shelton, who posed a big question: "Who is the smartest person you've ever met?"

Who is the smartest person Huang ever met?

At first, the question sounds like a softball. Of course, Huang might be expected to name someone with exceptional technical talent or a keen eye for design and engineering. He could even point to an important scientist or a tech leader, such as Steve Jobs. Instead, Huang argues that the most intelligent people today are those whose skills can't be duplicated by AI.

"I know what people are thinking, the definition of smart is somebody who's intelligent, solves [technical] problems," Huang responded. "But I find that's a commodity and we're about to prove that artificial intelligence is able to handle that part easiest, right?"

He added that software engineers were once widely seen as the most intelligent, but AI is now challenging that idea.

Huang says truly intelligent people know the "unknowables"

"I think long term ... and my personal definition of smart is someone who sits at that intersection of being technically astute but [has] human empathy," Huang said. "And having the ability to infer the unspoken around the corners. The unknowables. People who are able to see around corners are truly, truly smart. To be able to preempt problems before they show up, just because you feel the vibe. And the vibe came from a combination of data analysis, first principle life experience, wisdom, sensing other people, that vibe. That's smart. I think it's gonna be the future definition of smart, and that person might actually score horribly on the SAT."

The podcast's Instagram post received hundreds of comments. "This is a very smart answer to make everyone sound like they have a chance of being smartest person," one popular comment read. Another commenter joked, "Bro knows he's the smartest person he's ever met."

Ultimately, as we enter the AI era, it's becoming clear that the edge humans have isn't processing power, but the skills that make us most human: empathy, perception, wisdom, emotional intelligence, and the ability to read the room at both micro and macro levels. Huang understands that true human intelligence, something that can't be created in a data center, is, for now, still the most valuable asset of all.
