Viral post breaks down how those popular Lensa AI profiles are not as harmless as they seem

'AI art isn't cute.'


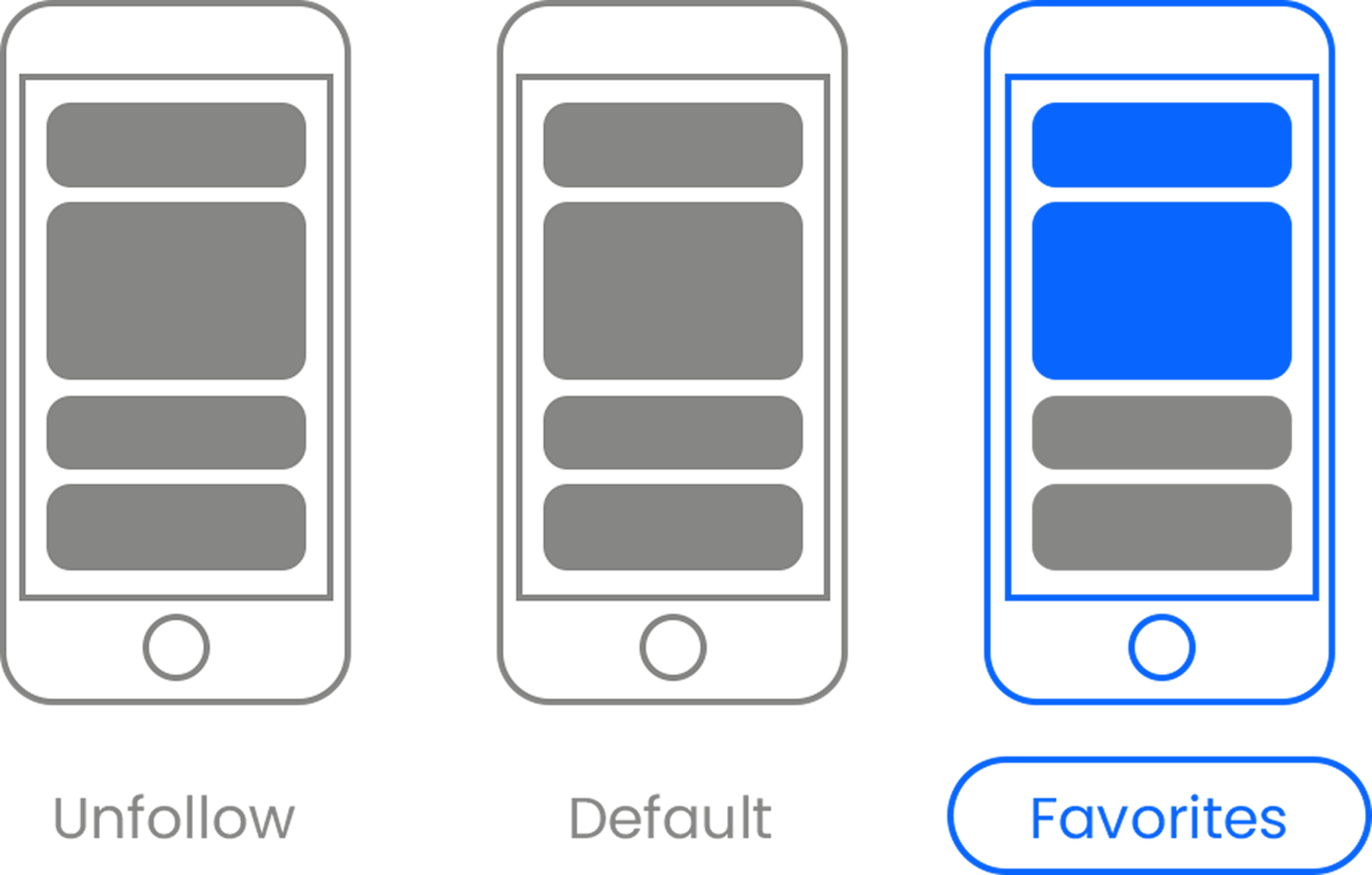

Odds are you’ve seen those Lensa AI avatars floating around social media. You know, the app that turns even the most basic of selfies into fantasy art masterpieces? I wouldn’t be surprised if you have your own series of images filling up your photo bank right now. Who wouldn’t want to see themselves looking like a badass video game character or magical fairy alien?

While getting these images might seem like a bit of innocent, inexpensive fun, many people are unaware that they come at a heavy price for the real digital artists whose work was copied to make them possible. A now-viral Facebook and Instagram post, made by a couple of digital illustrators, explains how.

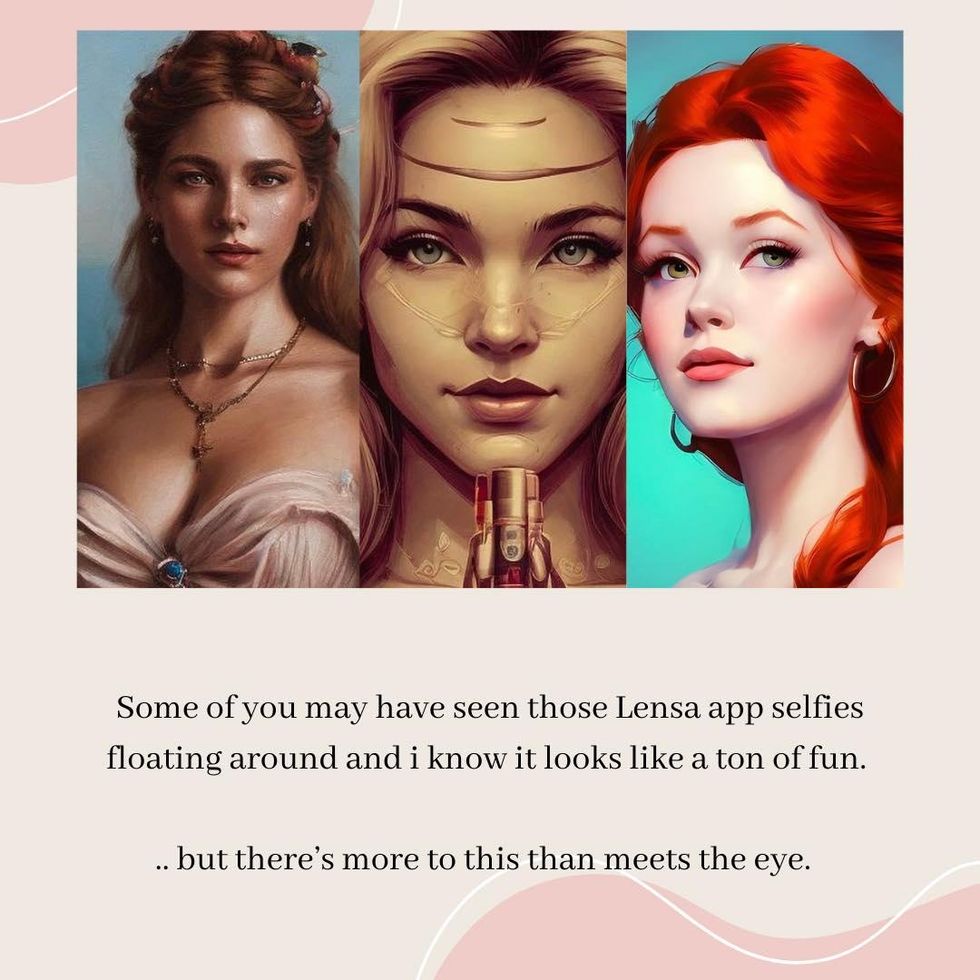

In a very thorough series of slides, Gen Ishihara and her fiancé Jon Lam explain that Lensa can render those professional-looking images so easily because it uses a Stable Diffusion model. Stable Diffusion is an open-source AI model (its code and model weights are publicly released and free to use) that lets users type in a series of words and have artificial intelligence conjure up images based on them. Type out a group of seemingly unrelated words like “ethereal,” “cat,” “comic style” and “rainbow,” and out will pop at least one cohesive, intricate piece of art, all in less than a minute. Stable Diffusion powers many other AI art apps as well, by the way, not just Lensa.

"...there's more than meets the eye"

The problem here is that Stable Diffusion was trained on yet another open collection of data, this one from a nonprofit called LAION. LAION's dataset links to more than 5 billion publicly accessible images scraped from the web, each paired with a text caption. If an image can be found online, there's a good chance LAION has catalogued it, all categorized as “research.”

Not only does this include copyrighted work, but also personal medical records, as well as disturbing images of violence and sexual abuse. But for the sake of not delving too far into darkness, we’ll focus on the copyright issue.

LAION's database includes copyrighted work, personal medical records and disturbing images of violence and sexual abuse.

While LAION might be a nonprofit, Stability AI, the company behind Stable Diffusion, is valued at $1 billion. And Lensa (which uses Stable Diffusion) has so far brought in $29 million in consumer spending. Meanwhile, the artists whose work can be found in that database have made nothing from it.

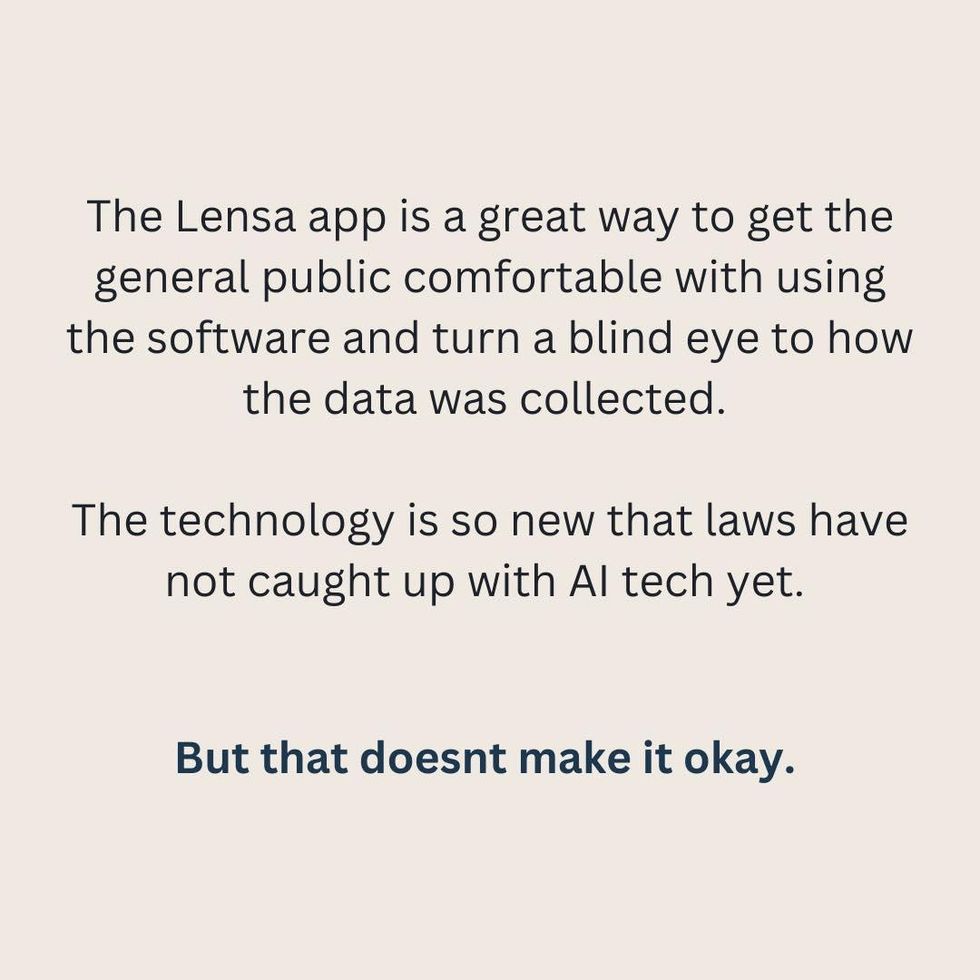

“The Lensa app is a great way to get the general public comfortable with using the software and turn a blind eye to how the data was collected. The technology is so new that laws have not caught up with AI tech yet. But that doesn't make it okay,” the post read.


Ishihara and Lam listed real artists who have been affected by Lensa’s use of data laundering, including Greg Rutkowski, whose “name has been used as a prompt around 93,000 times.” He even had his name attached to a piece of AI art that he did not create. Again, simply type in “Greg Rutkowski” along with whatever thing you want illustrated, and the program will create something drawn in his style.

You can see why someone who has dedicated a good portion of their life to developing a skill that can now be replicated with a fraction of the effort, without them receiving any compensation, might not be a huge fan of these trends.

Maybe it's more than harmless fun.

The post then went on to rebut several common pro-AI arguments. First, it pointed out that AI programs are being updated and improved so fast that their output is becoming impossible to distinguish from human-made art, meaning AI does in fact threaten the livelihoods of real artists who simply cannot compete with a machine.

Also shown was a poster created in Midjourney to promote the San Francisco Ballet’s upcoming “Nutcracker” performance, offered as evidence that AI-generated media has already begun to replace work that would otherwise go to human artists.

One of the most common arguments in the AI debate is that all art is derivative, since human artists also draw inspiration from other sources. While this is true, Ishihara and Lam contend that the organic process of blending “reference material, personal taste and life experiences to inform artistic decisions” is vastly different from a computer program that draws on other people's existing artwork and puts it to commercial use without their consent.

Adding further credibility to this viewpoint, another post floating around the internet shows Lensa portraits in which a warped version of an artist’s signature is still visible (seen below).

I’m cropping these for privacy reasons/because I’m not trying to call out any one individual. These are all Lensa portraits where the mangled remains of an artist’s signature is still visible. That’s the remains of the signature of one of the multiple artists it stole from.

A 🧵 https://t.co/0lS4WHmQfW pic.twitter.com/7GfDXZ22s1

— Lauryn Ipsum (@LaurynIpsum) December 6, 2022

Rather than doing away with AI art altogether, Ishihara, Lam and others are pushing for two things. First, they want better regulation of the companies behind this technology, so that it can coexist with human artists.

This might be easier said than done, but some progress has already been made on that front. The Federal Trade Commission (FTC), for example, has begun requiring companies to destroy any algorithms or AI models built using personal information or data collected “in bad faith or illegally.”

Second, they want people to educate themselves. Several other artists agreed that people who have used apps like Lensa aren’t wrong for doing so, but that moving forward, there needs to be more awareness of how these apps affect real people. Really, this is pretty much the case for all seemingly miraculous advances in technology.

And for creators feeling hopeless about all this, Ishihara and Lam say “whatever you do, don’t stop creating,” adding that it’s more important than ever not to “give up on what you love.”

The conversation around the ethical implications of AI is complex. While posts like these might come across as a form of fear-mongering or finger-wagging, it’s important to gather multiple points of view in order to engage in nuanced conversations and move forward in a way that accounts for everyone’s well-being.