Students show how new smart glasses can steal your information 'in a glance'

They also show how to protect yourself.

As technology advances, it will get harder to tell smart glasses from normal glasses.

It isn't hard to think of ways to misuse smart devices. Since cameras were added to phones, privacy concerns have grown in lockstep with technology. The ability to surreptitiously record a conversation, lurk through someone's social content, or slip an AirTag into a purse has created traps that are far too easy to fall into. Now, new devices are being released that might complicate things even further.

One duo of Harvard students has demonstrated how combining artificial intelligence, facial recognition, and wearables like smart glasses can open up a new frontier of abuse, allowing the wearer to access an amazing trove of information on a stranger just by looking at them. Fortunately, their research also focused on combatting these new dangers, and they've shared their findings.

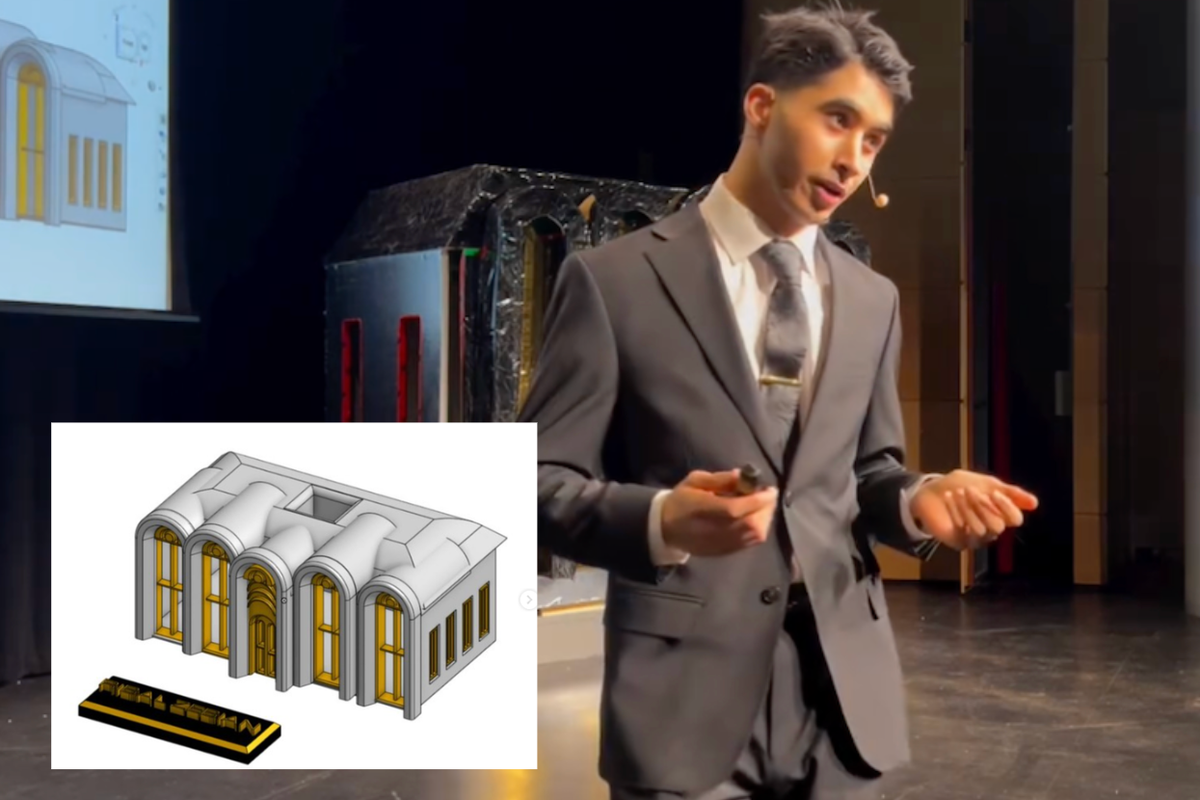

The Harvard students behind the project, AnhPhu Nguyen and Caine Ardayfio, built a program called I-XRAY that uses the Meta smart glasses to livestream video to Instagram. AI software monitors the stream, capturing faces and linking them to information from public databases. In seconds, the tech displays individuals' personal information, including names, addresses, phone numbers, and even names of relatives.

"The purpose of building this tool is not for misuse, and we are not releasing it."

— AnhPhu Nguyen

Nguyen explained that the project isn’t designed to exploit this technology but to show how easily it can be accessed and abused. “The purpose of building this tool is not for misuse, and we are not releasing it,” Nguyen and Ardayfio stated in a document detailing the project. Instead, they hope to raise awareness that the potential for misuse is here — not in a distant, dystopian future.

The dawning age of smart glasses

Since the launch of Google Glass over a decade ago, privacy concerns around smart glasses have been an ongoing issue. New devices like the recently released Ray-Ban Meta smart glasses have reignited the conversation. Google Glass faced significant backlash, and the company ultimately shelved the device partly due to public discomfort with being unknowingly filmed.

While people have grown more accustomed to cameras through social media, the idea of wearable, nearly invisible recording devices still unsettles many. Comments on the demonstration reveal that this discomfort persists:

"Guess in the future we will be wearing face-altering prosthetics to not get doxxed."

— Leek5 on Reddit

Meta has set guidelines for smart glasses users in response to etiquette questions. These include advising people to use voice commands or gestures before filming to ensure others are aware of being recorded. However, as the Harvard students demonstrate, these guidelines rely on individuals’ willingness to follow them — a tenuous safeguard at best.

AI’s role in connecting the dots

Nguyen and Ardayfio’s project relies heavily on large language models (LLMs), a type of AI that can identify connections across large data sets. By analyzing relationships between photos and database entries, I-XRAY can rapidly retrieve personal details. This capability, combined with real-time video streaming from the glasses, demonstrates how easily AI can connect scattered pieces of public data into a full profile of an individual.

In a video released to X, the students demonstrate the abilities of their app. Most people identified by the technique react with uncomfortable laughter or astonishment. While Nguyen and Ardayfio emphasize that they have no intention of releasing this technology, their project raises serious ethical questions about the future of AI and facial recognition. The fact that two college students could develop such a tool suggests that the technology is well within reach for anyone with basic resources and programming knowledge.

Steps you can take to protect your privacy

Fortunately, the creators of I-XRAY have outlined steps you can take to protect yourself from similar invasions of privacy. Many public databases like PimEyes and people-search sites allow individuals to opt out, though the process can be time-consuming and is not always fully effective. Additionally, they recommend freezing your credit with major bureaus and using two-factor authentication to prevent potential identity theft. Here are some practical steps to consider:

- Remove yourself from reverse face search engines – Tools like PimEyes and FaceCheck.id allow users to request removal. While this may not fully protect your privacy, it limits some exposure.

- Opt out of people search engines – Sites like FastPeopleSearch, CheckThem, and Instant Checkmate allow individuals to opt out. For a comprehensive list, The New York Times has published an extensive guide.

- Freeze your credit – Adding two-factor authentication and freezing your credit can protect your financial identity from SSN data leaks.

"Time to start wearing my Staticblaster foil jacket and my handy EMP-Lite Boombox whenever I get onto public transport."

— Just_Another_Madman on Reddit

As these technologies evolve, robust privacy protections will be essential to prevent misuse, and awareness of privacy risks can help individuals make informed choices about their digital and physical security. The creators of I-XRAY remind us that awareness and action are our best tools in this era of advancing surveillance tech.

