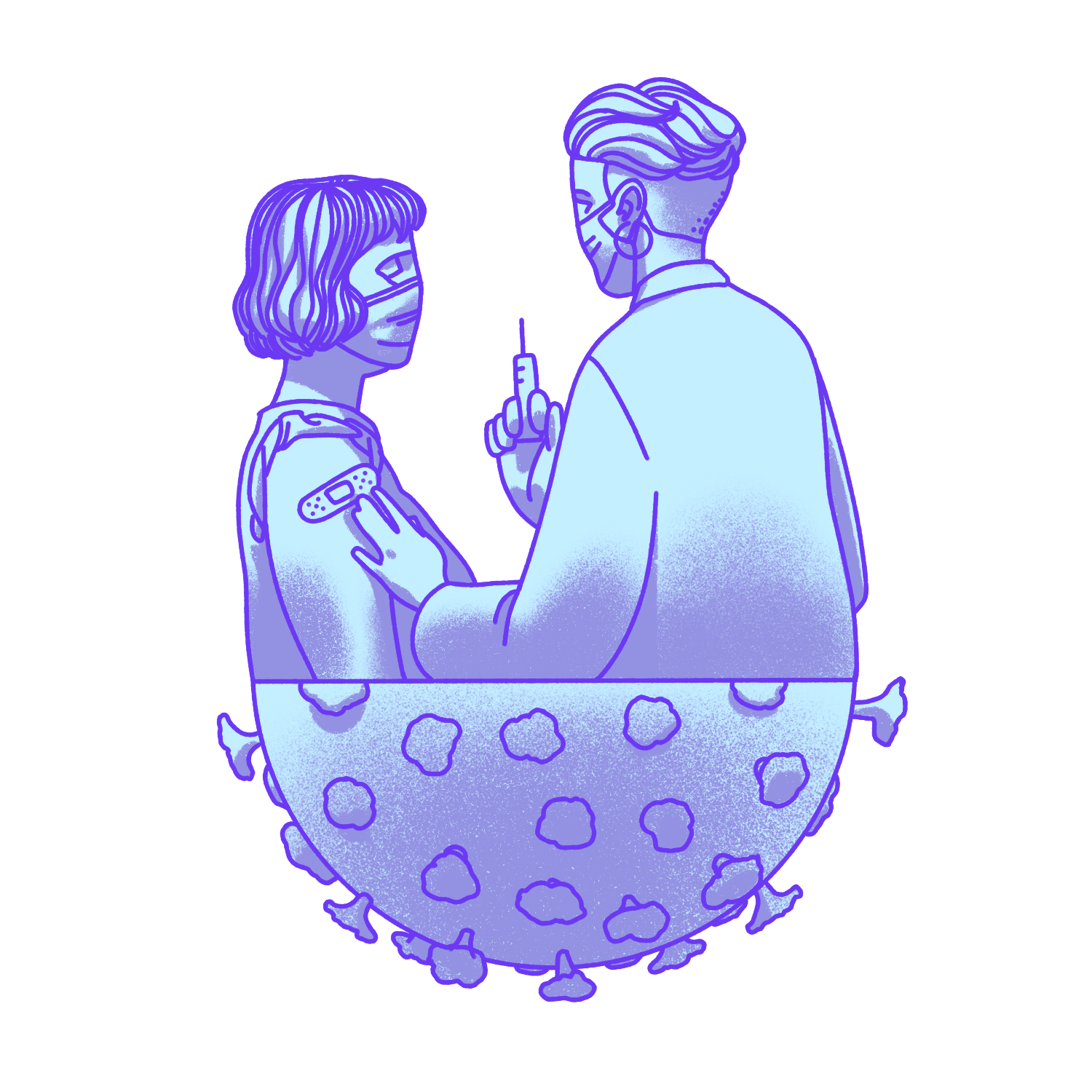

Before vaccination, cultures across Africa and Eastern Asia practiced something called inoculation to prevent smallpox. With inoculation, people would grind up smallpox scabs and ingest them, or transfer pus from a smallpox pustule into a healthy person through a break in the skin. The idea was that this would result in a milder, more survivable infection that would give the person immunity once they recovered. For centuries, inducing a mild illness through inoculation was the best available way to survive smallpox.

Smallpox was one of the world’s deadliest diseases, and it struck Boston in an epidemic during 1721. An enslaved man from West Africa named Onesimus shared with the Bostonians how he had been inoculated as a child in his homeland – a concept totally foreign to Americans. While some derided the concept as “African folk medicine,” inoculation started to catch on after local doctors began inoculating their patients. They found inoculated patients survived the disease at much higher rates than those who weren’t inoculated. Thanks to Onesimus, the practice became widespread throughout New England in the years after.

In the late 18th century, an English doctor named Edward Jenner took the concept of inoculation and improved it – making it safer and more accessible to the rest of the world. Jenner observed that local milkmaids who survived a disease called cowpox (a viral skin infection) were then immune to smallpox, its more deadly cousin. To test his hypothesis, Jenner took the pus from a cowpox blister found on a local milkmaid's hands and injected it into the arm of a young boy. When Jenner later tried to infect the boy with smallpox through inoculation, he found that the boy was immune. Jenner created the word “vaccine” from the Latin word vacca, meaning cow.

This was a game-changer: Now people could be immune to a deadly disease without the risk of catching the disease itself or spreading it to other people.

French microbiologist Louis Pasteur theorized that if vaccination could prevent smallpox, it could prevent other diseases as well. When Pasteur set out to create a vaccine for chicken cholera, he discovered something that would change the course of disease prevention forever. He realized that live microbes, such as the Pasteurella multocida bacterium that causes chicken cholera, became weaker when exposed to oxygen or heat. When Pasteur injected chickens with attenuated (or weakened) bacteria, the chickens developed mild symptoms, recovered, and became immune to the disease.

With this discovery, it became possible for vaccinated people to have immunity to a disease after experiencing only mild side effects and minimal risk of developing the full-blown disease. Pasteur went on to develop live-attenuated vaccines for anthrax and rabies as well.

By 1918, vaccines had already been established as a life-saving scientific achievement. But researchers still had issues preserving the live viruses in the vaccine against heat, meaning they couldn't readily be shipped or easily distributed to warmer-climate areas of the world without being ruined.

When the Vaccine Institute in Paris rolled out freeze-dried vaccines, doctors were able to transport vaccines to places like French Guiana (South America) and other tropical parts of the world under control of the French empire at the time. Without shelf-stable vaccines, millions of people in warmer climates would have been left unprotected, and widespread vaccination campaigns would not have been successful.

Some diseases, such as diphtheria (a serious infection of the nose and throat), measles, and pertussis (whooping cough), carry a high risk of serious illness and death among children. To prevent the spread of these diseases, many schools in the United States enacted vaccination requirements at the beginning of the 20th century, under which children had to produce a certificate of vaccination in order to attend. Between 1900 and 1998, widespread vaccination programs helped reduce the share of U.S. child deaths attributable to infectious diseases from 61.6% to just 2%.

Because the 1918-1919 flu pandemic had been so deadly among U.S. soldiers, developing a vaccine for influenza quickly became a high priority for the U.S. military. The first clinical trials for an experimental flu vaccine, tested mainly in military recruits and college students, kicked off during the 1930s.

In the early 1940s, scientists discovered something that put a hitch in their plans for vaccination – a second strain of influenza called influenza virus type B. Because the virus in the original vaccine did not always match the most widespread circulating strain, that vaccine became less effective at preventing illness.

Thankfully, in December 1942, scientists developed a combination vaccine that contained inactivated (or killed) influenza viruses, both type A and B. The combination vaccine was approved and rolled out in 1945.

Smallpox was still killing millions every year when the WHO launched the world's first coordinated effort to eradicate the disease. After two decades of global vaccination and surveillance, spearheaded by a team of international doctors and scientists, everyone's hard work paid off: the WHO declared in 1980 that smallpox had been officially eradicated – the only human disease ever known to completely disappear from Earth.

On a single January day in 1997, health workers vaccinated 127 million children against polio in India, a country struggling to control the disease. The following year, another 134 million would be vaccinated in a single day. From 1988 to 2000, polio cases fell by 99%.

The aim of the program was to encourage manufacturers to lower vaccine prices for the poorest countries in return for long-term, high-volume and predictable demand from those countries. Since its launch, the program is estimated to have halved the number of child deaths and prevented 13 million deaths.