"We suck at dealing with abuse and trolls on the platform and we've sucked at it for years," said former Twitter CEO Dick Costolo in a leaked 2015 memo.

"It's no secret and the rest of the world talks about it every day," he continued. "We lose core user after core user by not addressing simple trolling issues that they face every day."

It was a surprising admission about one of the service's biggest flaws. On Twitter, sharing other users' posts is as easy as a single touch, but that same ease means regular people going about their day can become the target of harassment from thousands of other users at a moment's notice.

Former Twitter CEO Dick Costolo. Photo by Kevork Djansezian/Getty Images.

Since the memo leaked, Twitter has been trying to solve some of the more obvious problems by adding a quality filter meant to weed out threatening messages from spam accounts and by creating a path to account verification. Still, these steps weren't enough, and the torrent of abuse its users experienced was hurting the company financially.

This week, Twitter announced a number of new tools meant to address the abuse problem, and they look like they might be pretty helpful.

(Trigger warning: Hate speech and transphobic slurs follow below.)

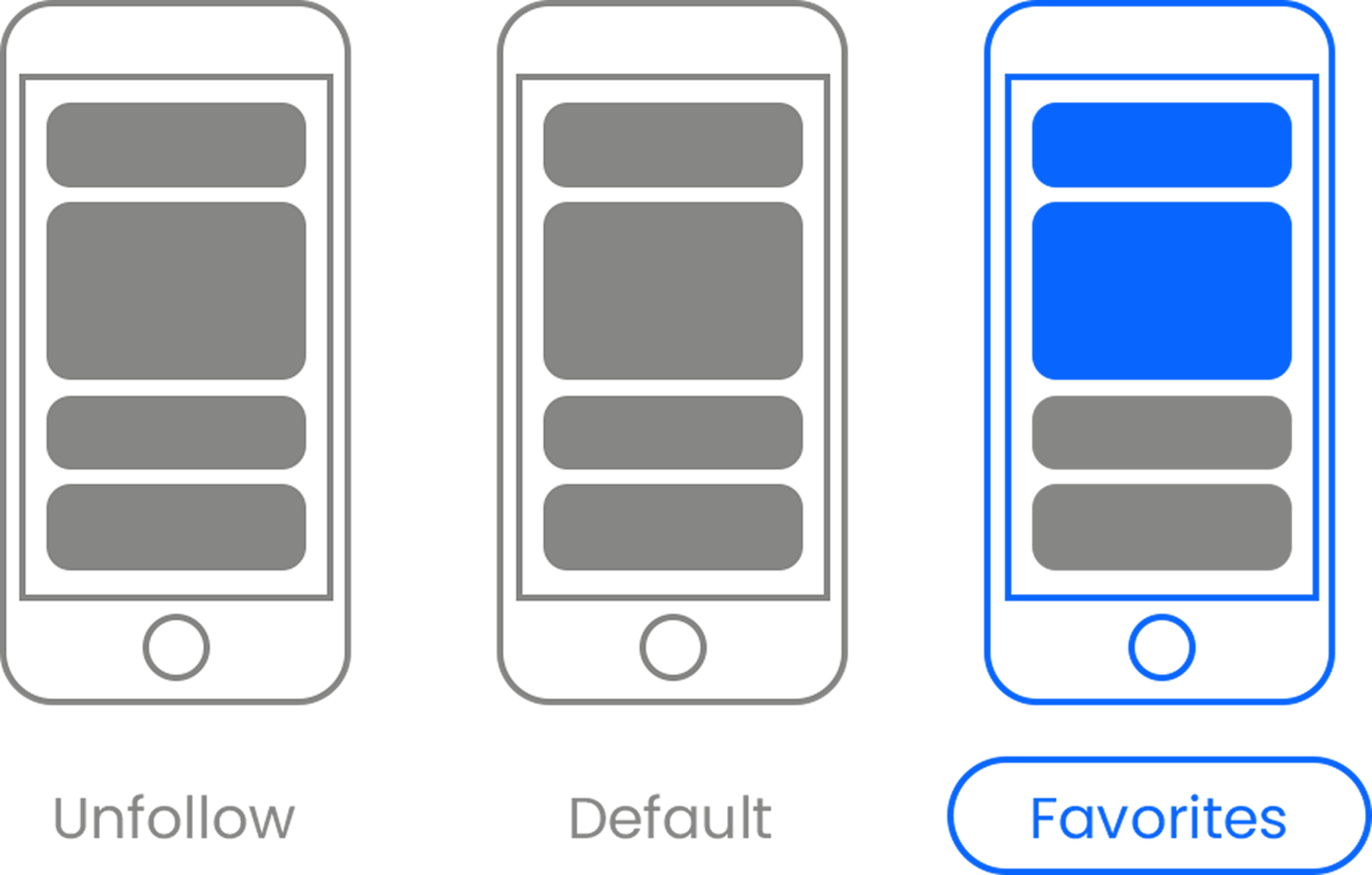

For one, Twitter is expanding the mute feature so users can opt out of conversations and notifications containing certain words. This is a big step forward, since one of the biggest problems targets of harassment face is the aftermath of a tweet that's getting too much attention from the wrong crowd.

More importantly, Twitter is clarifying its terms of service, making it easier for users to file a report, and providing training to support staff to ensure they're up to date on the latest policies. The hateful conduct policy bans abusive behavior targeting people on the basis of race, ethnicity, national origin, sexual orientation, gender, gender identity, religious affiliation, age, disability, or disease.

What does online harassment look like, anyway? Here's some of what I get sent my way on a regular basis:

It's not fun, and it's hurtful, but it's usually not too bad.

Other times, though, the messages contain violent threats. For example (and this gets a little graphic):

The problem is that in many of these cases, there hasn't been anything a user could do to prevent these messages from coming.

You can block a single user, but once they've shared your tweet with everyone who follows them, chances are their followers will come after you. It's an infinite loop of blocking people. You can also report violent threats as abusive, but after reviewing the report, Twitter often replies with a simple note saying that the reported tweets didn't violate its terms of service.

This is by no means limited to Twitter. Facebook has a similar problem with its reporting process, which can come off as ineffective and sometimes arbitrary.

It's all somewhat understandable. As businesses, Facebook and Twitter have to balance protecting free and open speech against shielding users from harm. Still, within hours of announcing the new anti-harassment tools, Twitter suspended a number of high-profile accounts known for engaging in targeted harassment. That's certainly a start.

With new technology changing our world all the time, it's important that we remember to treat one another as human beings. Anti-bullying efforts will play a crucial role in years to come.

Whether it's on Twitter, Facebook, or in virtual reality spaces, online privacy and harassment protection play an important role in society. It's easy to forget that there is a real human being on the other end of these online conversations. It's easy to treat them as undeserving of respect. It's easy to lose empathy for the world.

It's harder to do the right thing, but that doesn't change that it is, in fact, the right thing.

This is a teacher who cares.

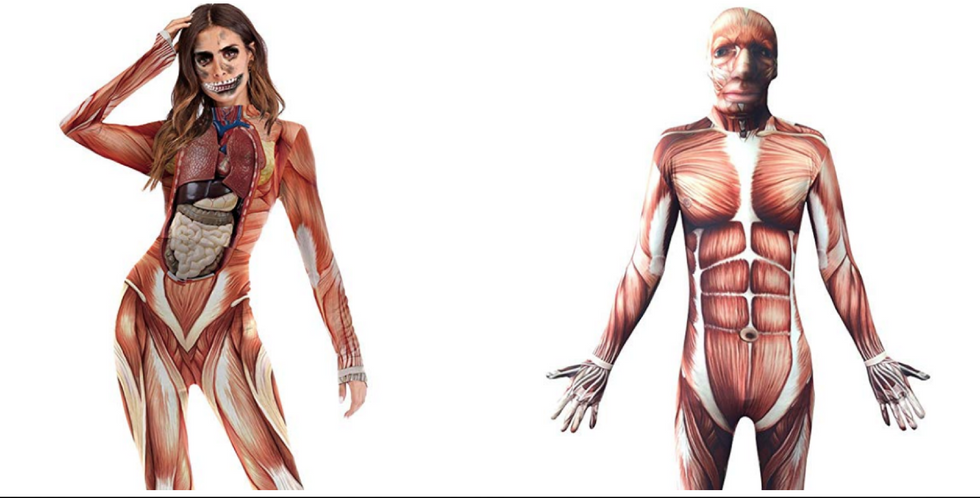

This is a teacher who cares.  Halloween costume, check.

Halloween costume, check.  The first deposit of orange peels in 1996.

The first deposit of orange peels in 1996. The site of the orange peel deposit (L) and adjacent pastureland (R).

The site of the orange peel deposit (L) and adjacent pastureland (R). Lab technician Erik Schilling explores the newly overgrown orange peel plot.

Lab technician Erik Schilling explores the newly overgrown orange peel plot. The site after a deposit of orange peels in 1998.

The site after a deposit of orange peels in 1998. The sign after clearing away the vines.

The sign after clearing away the vines. An adorable French Bulldogvia

An adorable French Bulldogvia  french bulldog gifofdogs GIF by Rover.com

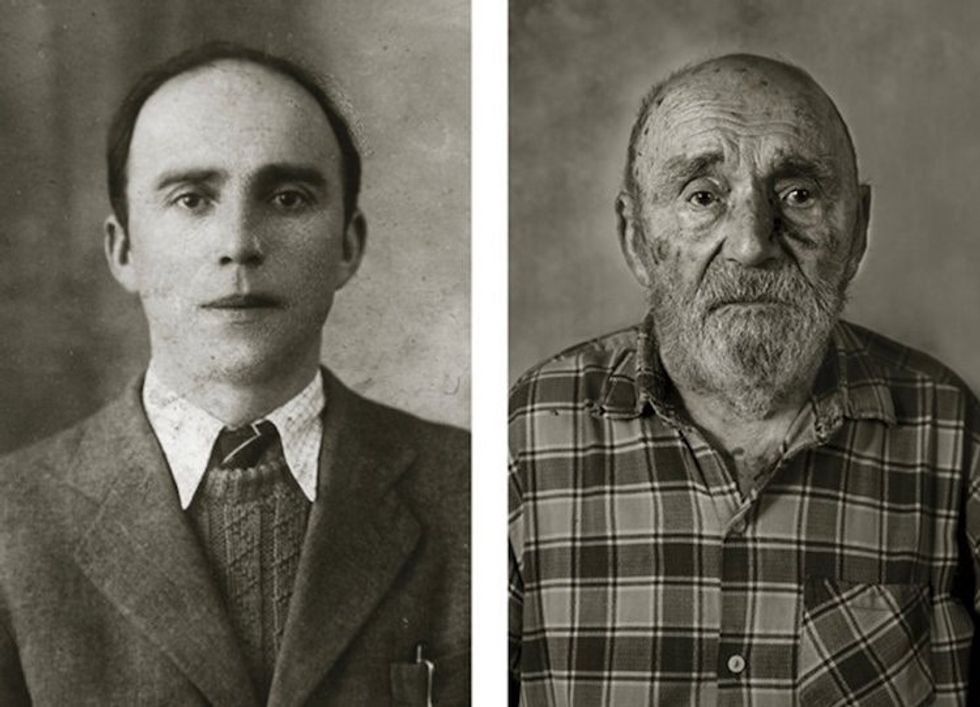

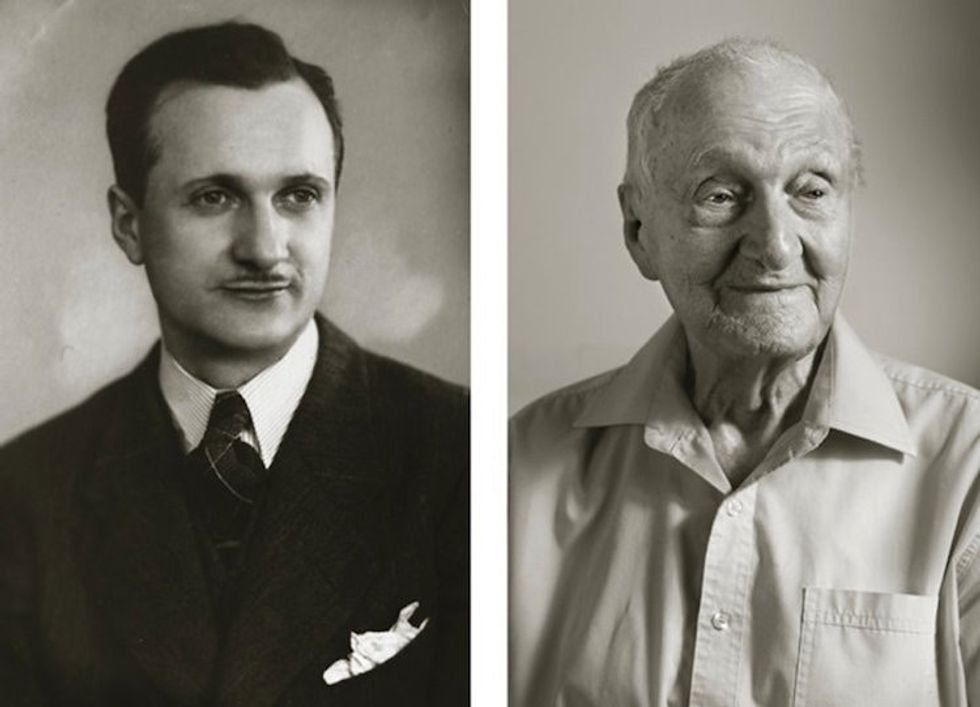

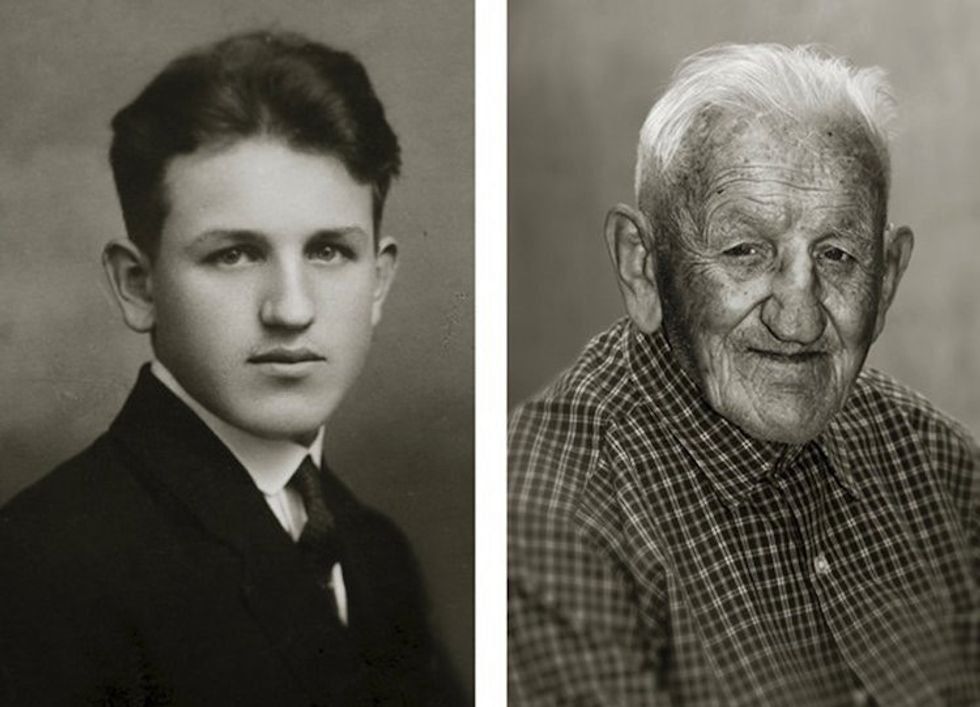

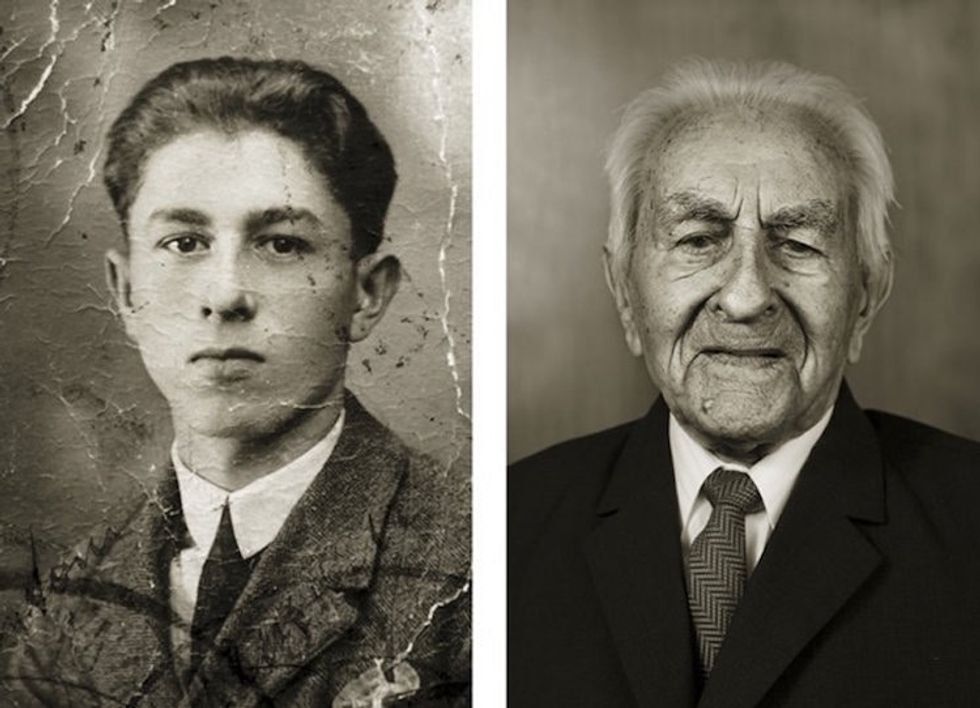

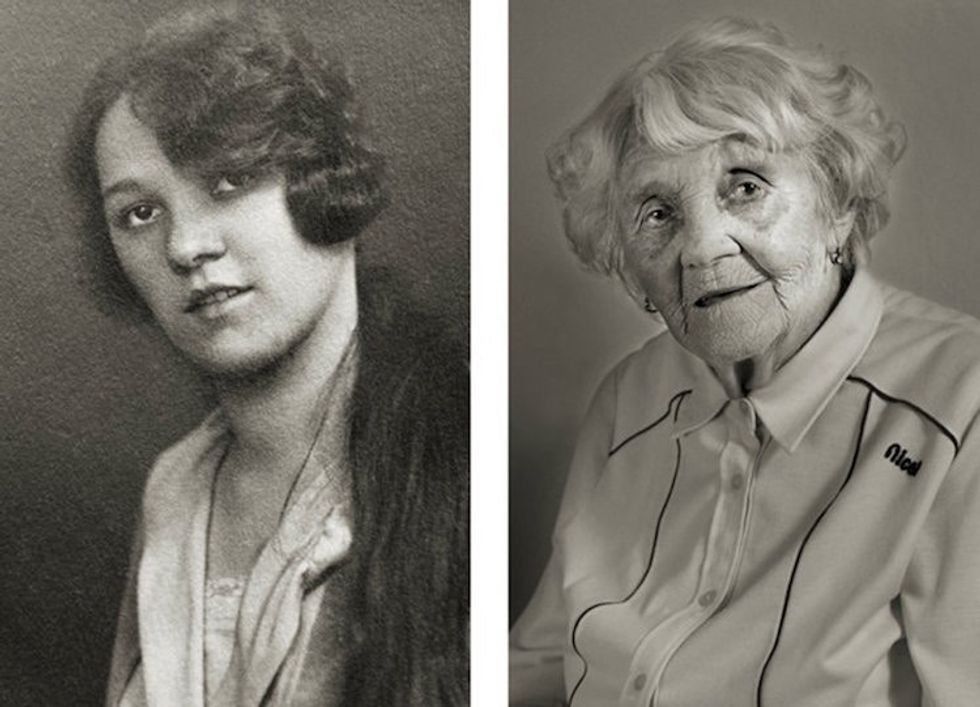

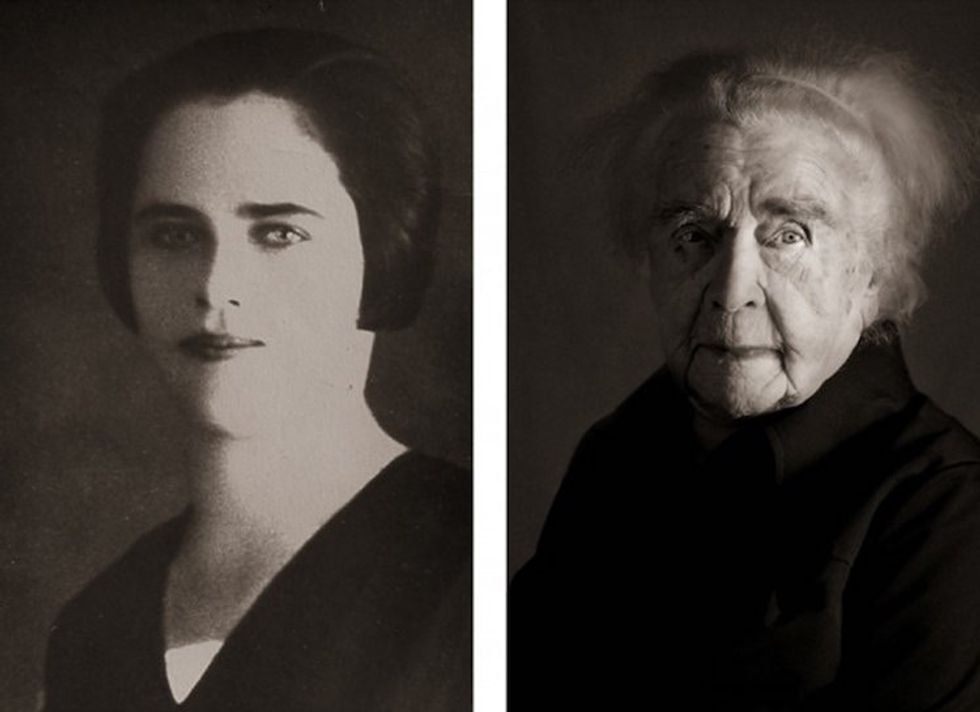

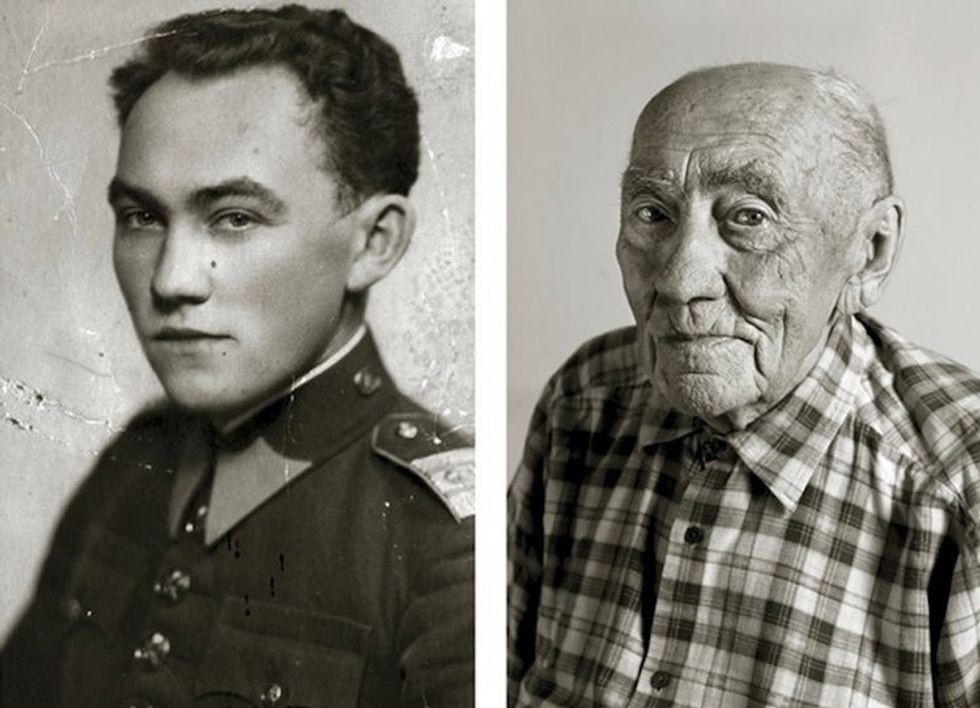

french bulldog gifofdogs GIF by Rover.com Prokop Vejdělek, at age 22 and 101

Prokop Vejdělek, at age 22 and 101