It was the 1990s and the Disney Animation department seemed unstoppable. Over the past decade, Walt Disney Studios Chairman Jeffrey Katzenberg and his army of directors, writers, and animators worked tirelessly to produce a shiny new animated movie for Disney every year, sometimes two or three times yearly. And by all accounts, it was working. These “little hits” they were pumping out for the big boss? Box-office miracles, all of them. Disney had cornered and perfected this market so well that audiences started to forget there were other animation studios out there. The time was known as the Disney Renaissance, and could you blame them? Between 1989 and 1999, Disney Animation truly had the Midas touch, and every movie they produced was imbued with once-in-a-generation movie magic.

Disney made magic happen in the 90s. Giphy

It began with The Little Mermaid in 1989 and was followed quickly by The Rescuers Down Under in 1990 and Beauty and the Beast in 1991. Aladdin (1992) followed, then The Nightmare Before Christmas (1993), The Lion King (1994), A Goofy Movie (1995), Pocahontas (also 1995), The Hunchback of Notre Dame (1996), Hercules (1997), Mulan (1998), and to cap off this incredible dynasty, Tarzan in 1999. Sprinkled between these hits were more hits from Pixar, whose films Disney distributed (Toy Story in 1995, A Bug's Life in 1998, Toy Story 2 in 1999). Though not technically part of the Renaissance, these hits only added to Disney's bank of classics.

The success seemed to make the team confident they could start doing stranger, quirkier films that strayed from the current cookie-cutter Disney model. But that hubris would lead to an epic battle within the studio. Though Katzenberg was dismissed in 1994, his mark had been made, and the Renaissance kept moving ahead until it ran into one of the most fraught productions in Disney’s history. Filled with creative clashes, drastic rewrites, personnel changes, and a rushed timeline, this project had the odds stacked heavily against it. That movie was Kingdom of the Sun.

Or, that’s what it would have been called if everything had gone according to plan. On December 10, 2000, at the El Capitan Theater in Los Angeles, Disney premiered The Emperor’s New Groove, a zany, very funny comedy about a selfish young emperor who is accidentally transformed into a llama by his treacherous advisor. To return to his human form, he must rely on a peasant from the village, Pacha, whom he has already wronged before the main plot gets underway. On paper, this had the potential to be a masterpiece. The cast was beyond stacked. David Spade played the most David Spade-est character of all time, a tart, conceited 17-year-old brat emperor named Kuzco; Eartha Kitt (!!) played Yzma, the emperor’s diabolical elderly advisor who secretly wishes to usurp him; and John Goodman played Pacha, the noble and kind village-dweller who must bring Kuzco back and return him to his human self. At the time, audiences coming off the heels of Tarzan were confused by the lack of grandeur. (Although The Emperor’s New Groove received generally positive critical reviews, by Disney standards it underperformed, grossing $169.5 million on a $100 million budget.)

But back to Kingdom of the Sun. In 1994, fresh off the gigantic success of The Lion King, Disney Studios' President at the time, Thomas Schumacher, handpicked the movie’s original director, Roger Allers, to lead their next film, which would explore an ancient culture such as the Incas, Aztecs, or Mayans. Allers, alongside co-writer Matthew Jacobs, dreamt up an epic tale set in Peru, where a greedy emperor (voiced by Spade), bored by life at the palace, would trade places with a similar-looking peasant (to be voiced by Owen Wilson), a premise resembling Mark Twain’s novel, The Prince and the Pauper. There were a few other Disney tidbits thrown in, like an evil god of death (Kitt) who sought to destroy the sun, and two love interests: the emperor’s betrothed, Nina, and a llama-herder named Mata (voiced by Laura Prepon). It was perfectly lovely and entirely safe, ideal for its studio. James Berardinelli and Roger Ebert’s book, The Reel Views 2, described Kingdom of the Sun as a “romantic comedy musical in the ‘traditional’ Disney style.” The team even traveled to Machu Picchu in 1996 to immerse themselves in Incan culture and study artifacts and architecture.

This did not go over well with the studio. First, they had already done a version of The Prince and the Pauper, a Mickey Mouse short from 1990 that the studio paired with various at-home releases. The idea felt tired. They needed more, especially considering the “underperformances” of Pocahontas and The Hunchback of Notre Dame. Disney execs were worried that Kingdom of the Sun would fall into the same trap: too self-serious and too ambitious (they had flown to Machu Picchu, c’mon!). So, they called Mark Dindal to come in and punch up the material, naming him co-director. This is where things start to go a bit haywire.

Suddenly, the original director, now co-director, Roger Allers, called up the singer Sting to compose songs for the movie. Sting agreed, under one condition: his wife, Trudie Styler, could come along and document the process (more on this later). They settled on the terms, and Sting and his collaborator began to work on eight original songs, each of which was “inextricably linked with the original plot and characters.” Only two songs made the final cut when The Emperor’s New Groove premiered, with three added to the soundtrack CD as bonuses. By the summer of 1998, Disney’s studio executives began to crash out.

Turns out, the film was in shambles. Giphy

The film was in shambles, nowhere near where it should be for a 2000 release, and it had to be released then, due to various crucial promotional deals Disney had set up with Coca-Cola and McDonald's. Allers had gone wild, overstuffing the film with too many plot elements, eager not to repeat the tired Disney “formula”: a hero, a villain, and a love song. In a panic, the Disney executives devised a plan that would later be known infamously as a “Bake Off.” The crew was split in two.

“They gave Mark Dindal a small crew, and me a small crew, to come up with two different versions of the story. Which is just kind of awful to compete against each other,” recalls Allers to Vulture. On one side was Allers, who proposed a complex yet emotionally moving film that had the potential to rival The Lion King. Dindal was on the other side, but his pitch didn’t involve story elements or characters being cut. He suggested an entirely different movie. According to storyboard artist Chris Williams, “Even more than probably pitching a story or new characters, we were pitching a tone. We were suggesting a radically different tone than what Kingdom of the Sun had been. A lot of what was funny about it was just how preposterous it was. And I’d never heard Tom and Peter laugh before. They were almost literally on the floor laughing.”

It wasn't really a pitch, like the movie ended up being, it was funny. Giphy

Roger Allers saw what was happening—this was the movie—and left the project. It was September 1998, and Disney had already wasted $30 million of its $100 million budget. Oh, and only 25% of the film was animated. They had about a year to pull off the heist of the century.

When they emerged from the “Bake Off,” Dindal and producer Randy Fullmer halted production for six months. They returned with The Emperor’s New Groove, a buddy comedy set loosely in Peru. It had become an entirely new film: most of the cast, except Spade and Kitt, had been fired. It was leaner, with fewer characters and simpler backgrounds. They squeaked by the deadline and somehow managed to release the movie in 2000 as planned.

And that would have been the end of it. Kind of weird and tonally different Disney film breaks even. Nothing more to see. That is, if not for Sting’s wife, documentarian Trudie Styler, who had been capturing all of the drama from the sidelines. Remember Sting’s ultimatum at the beginning? Styler kept her promise, creating a documentary called The Sweatbox, named for the screening room at Disney Studios in Burbank that had no air conditioning and caused the animators to sweat while their work was being inspected. What began as a behind-the-scenes vanity project for her husband transformed into an unprecedented glimpse behind the curtain at the corporate dysfunction at Disney.

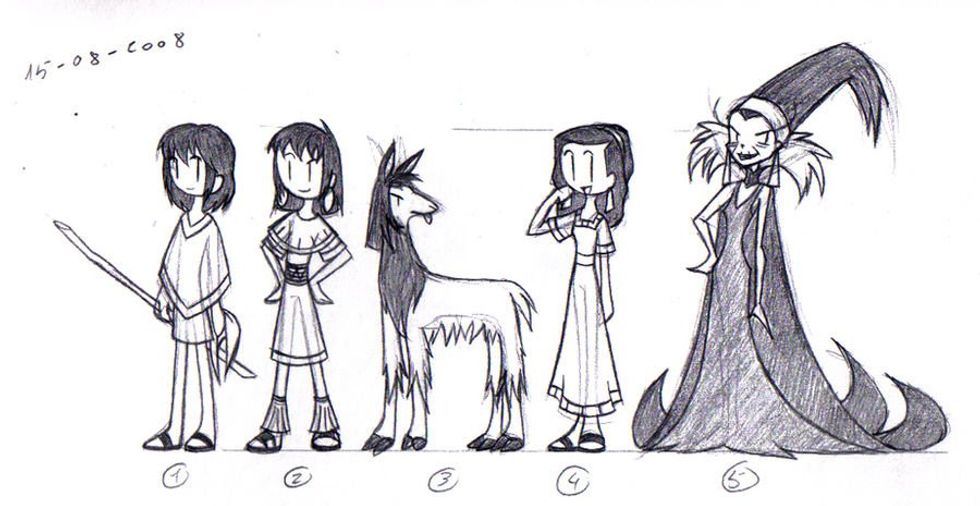

Sketches from Kingdom of the Sun. DeviantArt

But while The Emperor’s New Groove has found a niche cult following since its release, The Sweatbox can’t be found… anywhere. The 86-minute documentary, which Disney approved, was initially scheduled for release in 2001 and even enjoyed a few screenings, including a worldwide premiere at the Toronto Film Festival and an unpublicized one-week run at the Loews Beverly Center Cineplex in Los Angeles. Reporting on The Sweatbox, Wade Sampson wrote:

“The two executives did come across as nerdy bullies who really didn’t seem to know what was going on when it came to animation and were unnecessarily hurtful and full of politically correct speech. They looked like the kids in high school that jocks gave a “wedgie” to on a daily basis. How much of that impression was due to editing and how much was a remarkable, truthful glimpse is up to the viewer to decide.”

Now and then, a brave vigilante dares to post a clip of The Sweatbox online, but Disney always removes it. But it’s funny how things end up: after the first screening of The Emperor’s New Groove, someone from Disney leadership said, “We’ll never make that kind of movie again.” Yet, since then, Disney has released a direct-to-video sequel named Kronk’s New Groove, an animated TV series, and various games based on that “cursed movie.”

Seems like the Emperor didn't throw off Disney's groove after all.

Courtesy of Kerry Hyde